Aaron Swartz, OpenAI, and the Future of Information Control

Echoes of Aaron Swartz, From Guerilla Open Access to Ethical AI

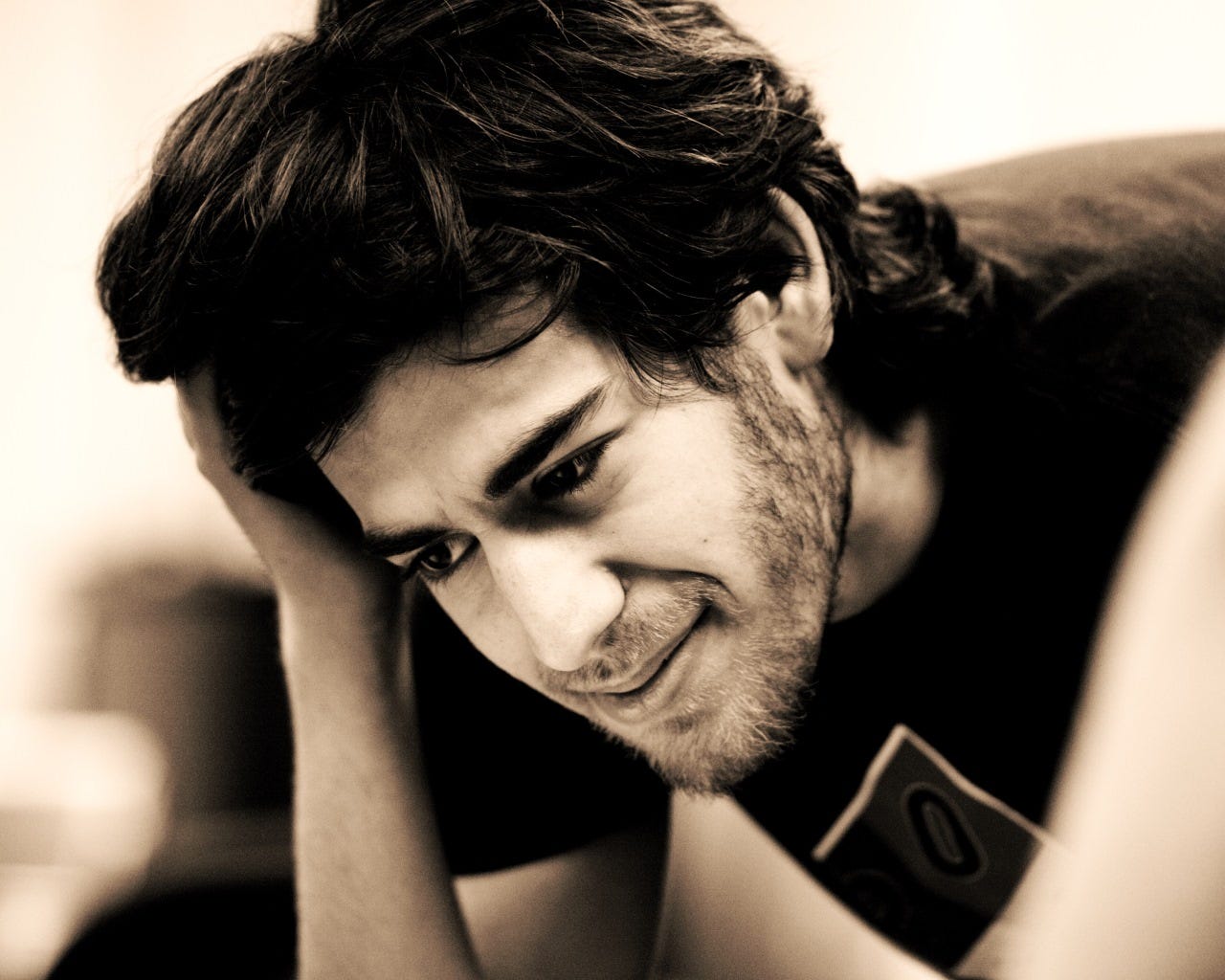

In an era dominated by the rapid advancements of artificial intelligence (AI), the intricacies of data rights and ethics have become pivotal. The contrasting journeys of Sam Altman, CEO of OpenAI, and the late Aaron Swartz offer profound insights into the evolving digital information landscape. Swartz, an internet activist, tragically ended his life on January 11, 2013, while facing federal charges for downloading academic articles from JSTOR. He faced up to 35 years in federal prison. Altman, once a part of the first Y Combinator group alongside Aaron Swartz, now leads OpenAI, known for its groundbreaking AI models. OpenAI's recent legal battle with The New York Times over the use of copyrighted material to train AI underscores a modern dilemma: how do we balance innovation with intellectual property rights? This poignant reminder of the power of information, the cost of fighting for it, and the challenges we face in the uncharted territory of AI, demands a deeper exploration.

OpenAI and Copyright Violations

The New York Times' legal filing on December 27, 2023, in the United States District Court for the Southern District of New York, alleges that OpenAI's use of The Times's work to create AI products competes with and threatens the newspaper's ability to provide services. The complaint states, “Defendants’ generative artificial intelligence (‘GenAI’) tools rely on large-language models (‘LLMs’) that were built by copying and using millions of The Times’s copyrighted news articles...” This case highlights the tensions between the cutting-edge innovation of AI and the longstanding principles of copyright law.

Aaron Swartz and the Cost of Open Access

Swartz, whose legacy is woven into the fabric of the internet, co-authored the RSS 1.0 specification, co-founded Reddit, and played a crucial role in developing Creative Commons licenses. Beyond his technical prowess, Swartz was a vanguard for open access, advocating for a world where knowledge was not sequestered behind paywalls. In their book "System Error: Where Big Tech Went Wrong and How We Can Reboot," Stanford professors Rob Reich, Mehran Sahami, and Jeremy M. Weinstein highlight Swartz's unique approach: “He was less interested in making money than in using technology to change how human beings access and interact with information.” Swartz saw technology as intertwined with politics and believed controlling information was a means to control people. He envisioned liberatory technology fostering liberatory politics.

At a Wikipedia conference, Swartz found inspiration, saying, “At most ‘technology’ conferences I’ve been to, the participants generally talk about technology for its own sake... Here, the primary concern was doing the most good for the world, with technology as the tool to help us get there.”

His prosecution under the Computer Fraud and Abuse Act (CFAA) for downloading academic articles from JSTOR raises critical questions about data accessibility and the legal frameworks governing digital content. United States Attorney Carmen M. Ortiz said, “Stealing is stealing whether you use a computer command or a crowbar...” This statement became a focal point in the debate on digital rights and the nature of ‘stealing’ in the digital age.

Humanizing the AI Conversation

Focusing solely on Swartz and Altman risks oversimplifying broader issues. However, their experiences underscore the need for clear, equitable regulations in our digital age. They humanize abstract concepts, making them relatable and urgent.

Finding the Balance: From Copyright Law to Digital Freedom

Altman's legal battle reflects current challenges in copyright law as applied to AI training, while Swartz's case remains a sobering reminder of the sometimes-harsh penalties in digital rights violations. Together, they illustrate the fine line between innovation and legality, between public domain and private rights.

A Call for Informed Discourse and Thoughtful Policy

The dialogue on AI and data privacy is incomplete without considering these contrasting outcomes. It is vital to provide context and maintain balance, using their stories as a springboard for broader discussions on AI ethics, data privacy, and regulatory reform.

Healthcare and AI

The potential of AI in healthcare is immense. However, as Sam Altman and Aaron Swartz's experiences demonstrate, there are concerns about data ownership and accessibility. Some have predicted a future dominated by branded LLMs like "Cleveland Clinic LLM, and other trusted medical centers" created with exclusive data sets. This scenario could create a landscape where smaller institutions struggle to compete, hindering innovation and equitable access to knowledge.

Collective Engagement: Wikipedia as a Model

Wikipedia demonstrates how volunteer editing communities can provide reliable, free information. During the COVID-19 pandemic, Wikipedia's coverage was extensive, real-time, and multilingual. This approach could be adopted in healthcare, with open-source platforms for data sharing and LLM development.

Creating a Creative Commons for Healthcare

A system recognizing contributions to health databases, akin to Creative Commons licenses, should include informed consent for using patient data in training models. A sovereign fund, as suggested by Sam Altman's Tools For Humanity, could compensate individuals for their data contributions.

Conclusion: A Legacy for the Digital Age

The stories of Sam Altman and Aaron Swartz collectively underscore the need for a nuanced approach to AI and data rights. Their experiences remind us to tread thoughtfully in the digital world, embracing Swartz's vision of technology as a powerful tool for good. As we navigate AI's uncharted territory, their legacies urge us to engage actively and responsibly, shaping a digital future that honors their contributions and visions. Swartz's poignant reminder of the power of information and the cost of fighting for it is more relevant than ever: “Transparency can be a powerful thing, but not in isolation... Let’s decide that our job is to fight for good in the world.”

The Trial of Aaron Swartz 2024: a work in progress utilizing ChatGPT

Aaron Swartz, a programmer and activist, took his own life by hanging on Jan. 11, 2013, at the age of 26. At the time of his suicide, Swartz had been indicted and was facing up to 35 years in prison for violating the Computer Fraud and Abuse Act of 1986 (CFAA). He had connected his laptop to the MIT computer network and downloaded articles from the JSTOR database in violation of its terms of service. His trial was scheduled for April 2013. That trial will never take place in a court of the US legal system, so this play carries it out on stage.

The Play

The Trial of Aaron Swartz, USA 2024 continues the conversation that the death of Aaron Swartz started. The trial challenges each one of us to explore our responsibility as members of society, our relationship to each other, and our relationship to the power of government and organizations. It provides an experiential exercise in citizenship that addresses the laws we are called upon to obey. The play casts the audience as jurors who must be vigilant observers of our government. It addresses complex issues that continue to evolve in the digital age, and it is about the role of information and digital technology in our democracy.

It is interesting that Swartz faced criminal prosecution, while the conflict involving Altman's OpenAI is a civil one.